Do These 5 AI Chip Startups Pose a Threat to Nvidia?

They say that a parent who claims to love all of her children equally is either a liar or doesn't know her rug rats very well. While we can truthfully say we're equally annoyed by all kids, we can relate to the above sentiment when it comes to some stocks. In other words, we have our favorites. One of the star performers highlighted in the Nanalyze Disruptive Tech Portfolio is Nvidia (NVDA), the first AI stock we ever covered back in 2017. The company turned its hardware, originally designed for compute-intensive gaming software, into the premier chips for most artificial intelligence applications. Can it retain that dominance amid the rise of well-funded AI chip startups?

AI Chip Market Heats Up

The AI chip market is perhaps one of the most dynamic and competitive industries going. In fact, we recently wrote about how AI chips are rapidly changing the semiconductor industry. (It's a good read for those who don't know an AI chip from a potato chip.) While we still believe that Nvidia and its graphics processing unit (GPU) technology are a good way to invest in AI chips, as investors we want to keep tabs on threats to the bottom line.

There are the obvious competitors like Intel (INTC), which has been making big investments in AI chips, autonomy, and chip design. Google (GOOG) introduced its own AI chips just a few years ago, while Apple (AAPL) is designing AI chips for its iconic smartphones. Chinese AI chip startups have made little attempt to hide their ambitions to unseat Nvidia, though, like many upstarts, they mostly focus on AI edge computing applications. These chips are designed to put enough computing power into IoT devices like smart video cameras, or into smartphones, that they don't need remote (i.e., cloud) servers to do all of the heavy thinking.

In September, Nvidia announced it would acquire UK-based Arm Holdings, a semiconductor design company, for $40 billion, touting the deal as "creating world's premier computing company for the age of AI." The acquisition buys Nvidia a leader in chip design for the mobile space. Arm has also been rapidly expanding its AI-specific hardware offerings, including a miniaturized neural processing unit (NPU) that brings to edge devices the kind of AI processing normally handled in data centers, where Nvidia has dominated. In fact, nearly 70% of the world's fastest supercomputers now incorporate Nvidia hardware.

Souped-up data centers and edge computing are where McKinsey & Company predicts most of the growth in AI-related semiconductors will come over the next five years. The firm projects annual growth of about 18% between now and 2025, when AI chips could account for almost 20% of all semiconductor demand, as shown above. That translates into about $67 billion in revenue. For reference, Nvidia, the market-dominant player, recorded more than $11.7 billion in revenue in 2019, and half of all its sales still come from gaming.
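
To make the McKinsey projection a little more concrete, here is a small sketch of the compound-growth arithmetic. It assumes (our assumption, not McKinsey's) that the roughly 18% rate compounds evenly for five years ending in 2025, and works backward from the $67 billion figure; the implied 2020 starting value is an illustration, not a number McKinsey published.

```python
# Compound-growth sketch for the McKinsey projection.
# Assumption (ours, not McKinsey's): ~18% growth compounds evenly
# for five years (2020 -> 2025), ending at ~$67 billion.

def implied_start(end_value: float, rate: float, years: int) -> float:
    """Work backward from an end value under constant annual growth."""
    return end_value / (1 + rate) ** years

def project(start_value: float, rate: float, years: int) -> list[float]:
    """Year-by-year values under constant annual growth, including year 0."""
    return [start_value * (1 + rate) ** y for y in range(years + 1)]

END_2025 = 67.0   # $ billions, per McKinsey
RATE = 0.18       # ~18% per year, per McKinsey
YEARS = 5         # assumed window: 2020 -> 2025

start = implied_start(END_2025, RATE, YEARS)
print(f"Implied 2020 market: ~${start:.1f}B")
for year, value in zip(range(2020, 2026), project(start, RATE, YEARS)):
    print(year, f"${value:.1f}B")
```

At 18% a year, the market more than doubles in five years (1.18 to the fifth power is about 2.29), which is why even a modest share of it matters to a company Nvidia's size.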

AI Chip Startups for Data Centers

All that means there's a lot of market share to grab in the next few years. Not only must Nvidia contend with a host of billion-dollar public companies, but plenty of AI chip startups have also emerged in recent years. In this article, we want to take a look at five of the most highly touted private companies poised to commercialize their technology in the data center market. We've covered all but one of these startups in previous articles, but most were in stealth mode at the time, have raised substantial funding since our last coverage, or are now making headlines with technological breakthroughs.

World’s Most Well-Funded AI Chip Startups

Two of the startups on our list, UK-based Graphcore and Silicon Valley-based SambaNova, have raised more than $900 million between them and have a combined valuation of more than $4 billion, though they take very different approaches to AI chip solutions.

Founded in 2016, Graphcore has raised $460 million from more than two dozen investors, with a valuation of $1.95 billion. The company took in $150 million in a Series D back in February, and there are rumors just this month that it's looking to add another $200 million to the war chest. Graphcore has created an AI chip it calls an intelligence processing unit (IPU) that, as we explained before, sacrifices a certain amount of number-crunching precision to allow the machine to tackle more math more quickly with less energy. This year it threw down the gauntlet to Nvidia when it released its latest IPU, the Colossus MK2, and packaged four of them into a machine called the IPU-M2000.
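
Graphcore's exact number formats aren't covered here, but the general trade-off behind lower precision is easy to show. The sketch below is a generic illustration using standard IEEE 754 formats through Python's built-in `struct` module, not Graphcore's actual arithmetic: it stores the same value in 16-, 32-, and 64-bit floats, showing that the half-precision copy takes a quarter of the memory of a 64-bit double but is accurate to only about three or four significant digits.

```python
import struct

def round_trip(value: float, fmt: str) -> float:
    """Store a value in the given IEEE 754 format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]

# struct format codes: 'e' = 16-bit half, 'f' = 32-bit single, 'd' = 64-bit double
FORMATS = {"half (16-bit)": "e", "single (32-bit)": "f", "double (64-bit)": "d"}

x = 0.1
for name, fmt in FORMATS.items():
    stored = round_trip(x, fmt)
    print(f"{name:16} {struct.calcsize(fmt)} bytes  stored={stored!r}  error={abs(stored - x):.2e}")
```

Fewer bits per number means more numbers fit in on-chip memory and each operation needs less circuitry and energy; for many neural network workloads, that small rounding error barely affects the result.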

About the size of a DVD player (do they still sell those?), the IPU-M2000 packs one petaflop of computing power. One petaflop is a quadrillion floating-point operations per second, and the world's fastest supercomputer, Japan's Fugaku, is rated at a world-record 442 petaflops (using Arm's chip architecture, incidentally). Fortune reported earlier this year on how Graphcore could compete with Nvidia in the supercomputer chip race. In a test using a state-of-the-art image classification benchmark, eight of Graphcore's new IPU-M2000 machines clustered together could train an algorithm at a cost of $259,000, compared to $3 million for 16 of Nvidia's DGX systems, each of which contains eight of the company's top-of-the-line chips.
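
The arithmetic behind that comparison is worth spelling out. This sketch only restates the figures reported above (machine counts, chips per machine, and cost); it says nothing about how either setup was configured for the benchmark.

```python
# Figures as reported in the Graphcore vs. Nvidia benchmark comparison.
graphcore = {"machines": 8, "chips_per_machine": 4, "cost_usd": 259_000}    # IPU-M2000s, 4 Colossus MK2 IPUs each
nvidia = {"machines": 16, "chips_per_machine": 8, "cost_usd": 3_000_000}    # DGX systems, 8 GPUs each

def total_chips(system: dict) -> int:
    """Total accelerator chips across all machines in a setup."""
    return system["machines"] * system["chips_per_machine"]

cost_ratio = nvidia["cost_usd"] / graphcore["cost_usd"]
print(f"Graphcore chips: {total_chips(graphcore)}, Nvidia chips: {total_chips(nvidia)}")
print(f"Nvidia setup costs ~{cost_ratio:.1f}x as much")
```

Put simply, the Graphcore setup used a quarter as many chips at roughly one-twelfth of the cost.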

Update 01/07/2021: Graphcore has raised $222 million in Series E funding to support its global expansion and to accelerate the development of its intelligence processing units (IPUs), which are specifically designed to power artificial intelligence software. This brings the company’s total funding to $682 million to date.

Founded in 2017, SambaNova has raised about $465 million, including a $250 million Series C in February that catapulted the company to a valuation of $2.5 billion. It has attracted funding from some big names like Google, investment management company BlackRock (BLK), and Intel, among others. SambaNova has taken what's been called a hybrid approach to AI chip design by giving equal emphasis to both the hardware and software. An article last month in The Next Platform, which specializes in coverage of high-end computing like supercomputers and data centers, reported on how SambaNova is generating interest from national laboratories that host supercomputers. Specifically, the startup's latest DataScale computers were hooked up to the Corona supercomputer and performed five times better than Nvidia's V100 GPUs.

Update 04/13/2021: SambaNova Systems has raised $676 million in Series D funding to aggressively challenge legacy competitors as it continues to shatter the computational limits of AI hardware and software currently on the market. This brings the company’s total funding to $1.1 billion to date.

World’s Biggest AI Chip

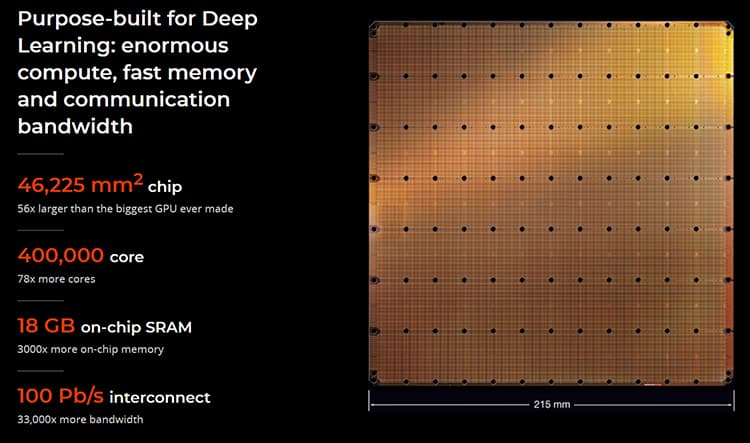

Another Silicon Valley-based AI chip startup, Cerebras Systems, has also made its way into the nation's supercomputer laboratories. Founded in 2016, the company has raised $112 million from a handful of well-known Silicon Valley and San Francisco venture capital firms, including Sequoia Capital, Benchmark, and Foundation Capital. It only emerged from stealth mode last year, boasting the world's largest chip, the Wafer Scale Engine (WSE), which is 56x the size of the largest GPUs on the market.

We're finally getting a look at why Cerebras thinks bigger is better. Just one WSE powers the company's new CS-1 computer, which is designed to accelerate deep learning in the data center. Training neural networks on today's hardware to do things like drive autonomously can take weeks, if not months, and tons of power. The company claimed in an IEEE Spectrum article that a single CS-1 could easily outmuscle a cluster of Google AI computers that consumes five times as much power, takes up 30 times as much space, and delivers just one-third of the performance. More recently, Cerebras demonstrated its AI brawn when the CS-1 beat the world's 69th-fastest supercomputer in a simulation of combustion in a coal-fired power plant. In addition to national laboratories, the company's biggest known customer is the drugmaker GlaxoSmithKline (GSK).
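
Taken at face value, those ratios compound. The sketch below normalizes one CS-1 to 1 unit of performance, power, and space and applies the company's claimed multiples; these are Cerebras' own figures as reported by IEEE Spectrum, not independent measurements.

```python
from fractions import Fraction

# Cerebras' claim, normalized so one CS-1 = 1 unit of performance, power, and space.
cs1 = {"performance": Fraction(1), "power": Fraction(1), "space": Fraction(1)}
google_cluster = {"performance": Fraction(1, 3), "power": Fraction(5), "space": Fraction(30)}

def efficiency(system: dict, resource: str) -> Fraction:
    """Performance delivered per unit of a resource (power or space)."""
    return system["performance"] / system[resource]

for resource in ("power", "space"):
    advantage = efficiency(cs1, resource) / efficiency(google_cluster, resource)
    print(f"CS-1 performance per unit of {resource}: {advantage}x the Google cluster")
```

Three times the performance on one-fifth the power works out to 15 times the performance per watt, and on one-thirtieth the space, 90 times the performance per unit of floor space.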

AI Chips for Fast Inference

While we've mostly been talking about AI chip applications for training and simulation, startups like Groq out of Silicon Valley and Tenstorrent from Toronto are working to accelerate the actual execution of AI tasks by neural networks, known as inference. This is when a trained AI system makes predictions from new data.
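
The difference between the two phases can be sketched with a toy one-parameter model in plain Python. Training loops over known examples many times to fit a weight; inference is then a single forward pass on new data, and it's that second step that chips like Groq's and Tenstorrent's aim to speed up. The model, data, and learning rate here are made up for illustration; real neural networks have millions or billions of weights.

```python
# Toy illustration of training vs. inference with a one-weight linear model.
# The data, learning rate, and epoch count are made up for illustration.

def train(xs: list[float], ys: list[float], lr: float = 0.01, epochs: int = 1000) -> float:
    """Training: repeatedly adjust weight w to reduce mean squared error on known examples."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of mean squared error with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w

def infer(w: float, x: float) -> float:
    """Inference: a single cheap forward pass on new data with the learned weight."""
    return w * x

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]   # underlying rule: y = 2x
w = train(xs, ys)
print(f"learned w = {w:.3f}")
print(f"prediction for new input 10: {infer(w, 10.0):.2f}")
```

Training here makes 1,000 passes over the data, while each prediction afterward is a single multiplication. At data center scale, inference runs constantly for every user request, which is why speeding it up is a market in its own right.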

Founded in 2016, Groq has raised about $62 million in disclosed funding, though it remained mum on how much money it took in from its latest fundraising back in August. The startup's founder, Jonathan Ross, was the brains behind Google's in-house AI chip, the Tensor Processing Unit (TPU). The company's name is a play on grok, a term coined by science fiction author Robert Heinlein that means to understand intuitively. Its Tensor Streaming Processor (TSP) reportedly more than doubles the performance of today's GPU-based systems.

How? Well, we're MBAs and not semiconductor manufacturers, but basically, the company has redesigned the chip architecture. Instead of creating a small programmable core and replicating it dozens or hundreds of times, the TSP houses a single enormous processor that has hundreds of functional units. And, like SambaNova, Groq treats software as integral to creating the sort of efficiencies in computing power that could make Nvidia investors a wee bit nervous. In September, the company started shipping its Groq node, capable of six petaflops per machine, targeting cloud computing for industries like autonomous driving and fintech.

Update 04/15/2021: Groq has raised $300 million to hire more employees and speed up the development of new products. This brings the company’s total funding to $362.3 million to date.

Yet another AI chip startup founded in 2016, Tenstorrent has raised $34.5 million. The company has dubbed its AI chip Grayskull, and the design mirrors others on our list by doing things like drastically increasing the amount of on-chip memory compared with Nvidia, which relies on fast off-chip memory. Another strategy Tenstorrent employs, which sounds similar to Graphcore's, at least in principle, is to build a chip that works like the human brain in that it can draw quick conclusions without processing massive amounts of data. (You just have to look around you to see how well that works for humans.) Anyway, the idea is to compress or eliminate extraneous data that would otherwise burn extra energy. An analysis by the Linley Group, for example, found that Grayskull uses only 75 watts of power to perform 368 trillion operations per second, compared with about 300 watts consumed by Nvidia hardware for the same performance.
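
Normalizing to performance per watt makes the Linley Group's comparison easier to read. The sketch uses only the figures quoted above and treats Nvidia's draw as exactly 300 watts, an approximation of the reported "about 300 watts."

```python
# Performance-per-watt comparison using the Linley Group figures.
TOPS = 368               # trillion operations per second (same performance for both)
GRAYSKULL_WATTS = 75
NVIDIA_WATTS = 300       # approximation of "about 300 watts"

def tops_per_watt(tops: float, watts: float) -> float:
    """Trillion operations per second delivered per watt consumed."""
    return tops / watts

grayskull = tops_per_watt(TOPS, GRAYSKULL_WATTS)
nvidia = tops_per_watt(TOPS, NVIDIA_WATTS)
print(f"Grayskull: {grayskull:.2f} TOPS/W")
print(f"Nvidia:    {nvidia:.2f} TOPS/W")
print(f"Efficiency advantage: {grayskull / nvidia:.1f}x")
```

Because the performance is the same, the advantage is simply the ratio of the power figures: 300 watts divided by 75 watts, or about four times the work per watt.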

Update 05/24/2021: Tenstorrent has raised $200 million at a post-money valuation of $1 billion to build a sustainable roadmap to challenge Nvidia in the AI market. This brings the company’s total funding to $234.5 million to date.

Conclusion

There is no doubt that Nvidia is the leading AI chipmaker on the planet today, and that dynamic isn't likely to change anytime soon. However, the five startups featured here are all starting to commercialize their hardware solutions after three or four years of development. They promise better performance while using less power and space, at a lower cost. Several also claim their AI chip design can scale from the data center down to edge devices, meaning less R&D to introduce new applications.

Nvidia has been criticized at times for lagging in innovation, and its hardware largely remains expensive and power-hungry. Nvidia's pending acquisition of Arm is probably more important to the company's long-term success as a traditional semiconductor manufacturer than to its supremacy in AI chips. Investors would like to see Nvidia maintain its high-growth potential, and that could mean adopting (i.e., acquiring) some of the AI chip innovations being championed by these five nimble startups.

nextbigfuture: Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, unveiled Andromeda, a 13.5 million core AI supercomputer, now available and being used for commercial and academic work. Built with a cluster of 16 Cerebras CS-2 systems and leveraging Cerebras MemoryX and SwarmX technologies, Andromeda delivers more than 1 Exaflop of AI compute and 120 Petaflops of dense compute at 16-bit half precision. It is the only AI supercomputer to ever demonstrate near-perfect linear scaling on large language model workloads relying on simple data parallelism alone.

Hopefully they use all this power that’s been unleashed to solve bigger problems than building a convincing chatbot.